Federated Logistic Regression¶

Logistic Regression (LR) is a widely used statistical model for classification problems. FATE provides two kinds of federated LR: Homogeneous LR (HomoLR) and Heterogeneous LR (HeteroLR and Hetero_SSHE_LR).

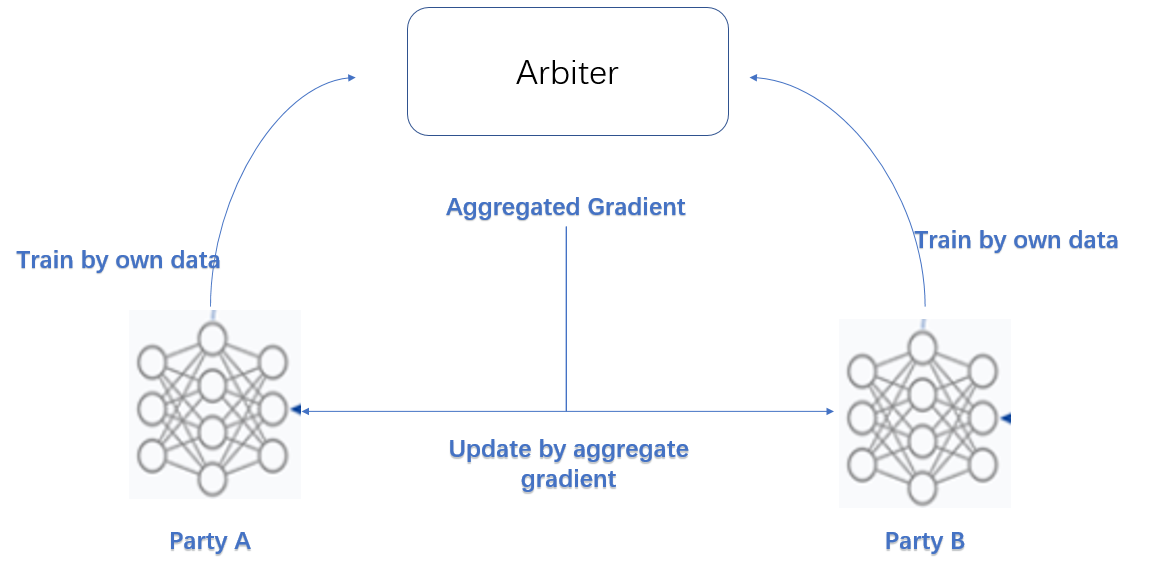

We simplify the federation process into three parties. Party A represents the Guest and party B represents the Host, while party C, also known as the "Arbiter", is a third party that holds a private key for each party and works as a coordinator. (Hetero_SSHE_LR has no "Arbiter" role.)

Homogeneous LR¶

As the name suggests, in HomoLR the feature spaces of the guest and the hosts are identical. An optional encryption mode for computing gradients is provided for host parties. When it is enabled, the plaintext model is no longer available to that host.

Models of Party A and Party B have the same structure. In each iteration, each party trains its model on its own data. After that, all parties upload their encrypted (or plain, depending on your configuration) gradients to the arbiter. The arbiter aggregates these gradients to form a federated gradient, which is then distributed to all parties for updating their local models. As in traditional LR, training stops when the federated model converges or when the training process reaches a predefined maximum number of iterations. More details are available in the paper Practical Secure Aggregation for Privacy-Preserving Machine Learning.
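The aggregation step described above can be sketched in plain Python. This is only an illustration under stated assumptions: the function names are hypothetical, gradients are plaintext lists, and weighting each party by its local sample count is an assumption rather than FATE's documented behavior. The encrypted mode and secure aggregation are not modeled.

```python
from typing import List, Sequence


def aggregate_gradients(party_gradients: Sequence[Sequence[float]],
                        sample_counts: Sequence[int]) -> List[float]:
    """Average per-party gradients, weighting each party by its sample count.

    Hypothetical helper: the weighting scheme is an assumption for illustration.
    """
    if not party_gradients or len(party_gradients) != len(sample_counts):
        raise ValueError("need exactly one sample count per party gradient")
    total = sum(sample_counts)
    dim = len(party_gradients[0])
    federated = [0.0] * dim
    for grad, n in zip(party_gradients, sample_counts):
        for j in range(dim):
            federated[j] += grad[j] * n / total
    return federated


def apply_update(weights: Sequence[float],
                 gradient: Sequence[float],
                 learning_rate: float = 0.01) -> List[float]:
    """One plain gradient-descent step using the federated gradient."""
    return [w - learning_rate * g for w, g in zip(weights, gradient)]
```

Each party would call `apply_update` with the same federated gradient, so all local models stay identical after every round.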

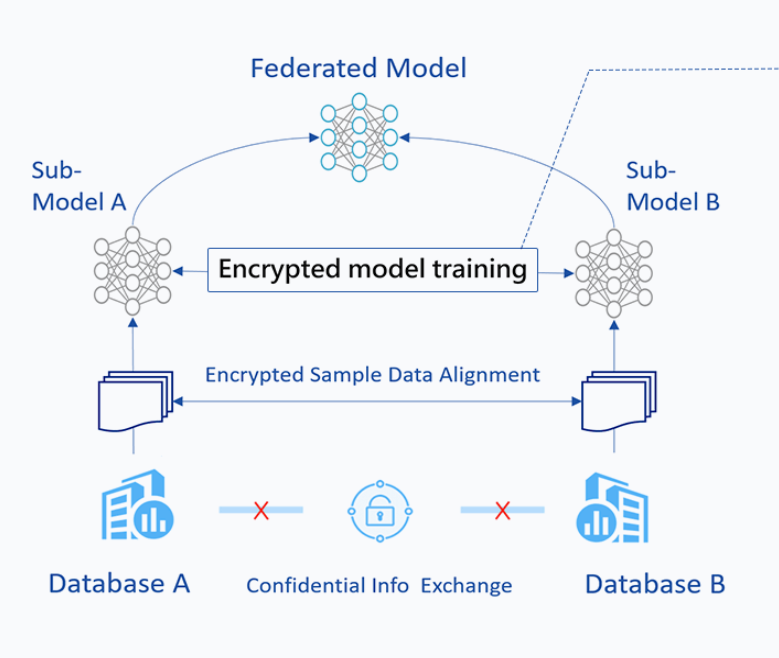

Heterogeneous LR¶

HeteroLR carries out federated learning in a different way. As shown in Figure 2, a sample alignment process is conducted before training. This process identifies the overlapping samples stored in the databases of the two parties involved. The federated model is built on those overlapping samples. The sample alignment process does not leak confidential information (e.g., sample IDs) between the two parties, since it is conducted in an encrypted way.

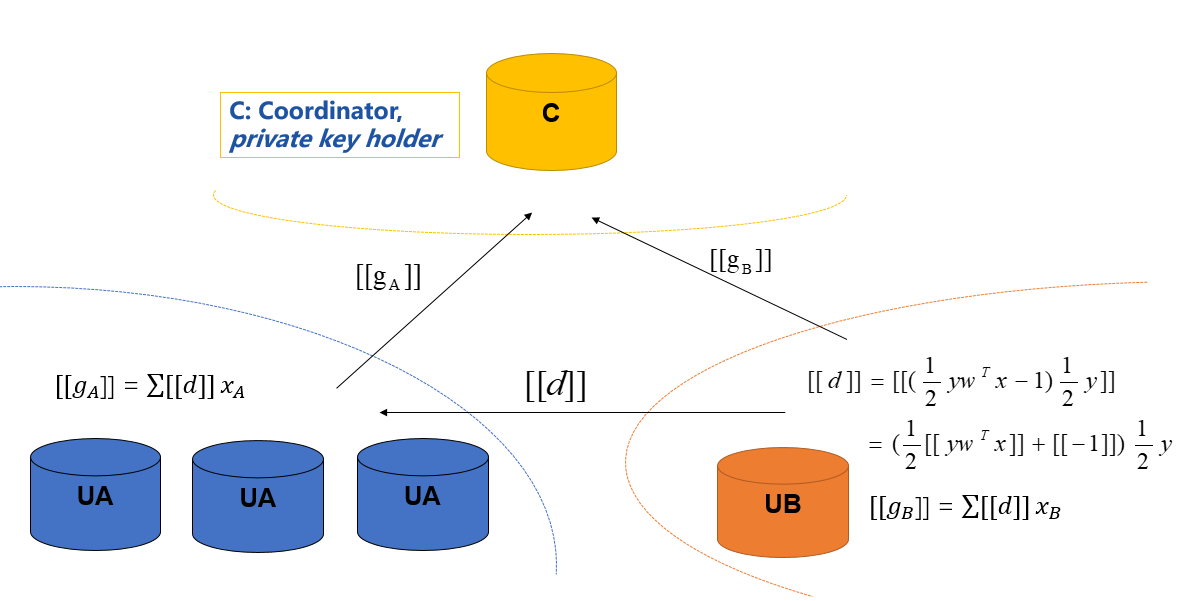

In the training process, party A and party B each compute the elements needed for the final gradients. The arbiter aggregates them, computes the gradient, and transfers it back to each party. More details are available in the paper Private federated learning on vertically partitioned data via entity resolution and additively homomorphic encryption.

Multi-host hetero-lr¶

In the multi-host scenario, the gradient computation stays the same as in the single-host case. However, we use the second norm (L2 norm) of the difference between the model weights of two consecutive iterations as the convergence criterion. Since the arbiter can obtain the complete model weights, the convergence decision is made by the arbiter.
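A minimal sketch of such a weight-difference check is shown below. The function name is hypothetical, and comparing the norm directly against `tol` (without any normalization) is an assumption, not necessarily what FATE does internally.

```python
import math
from typing import Sequence


def weight_diff_converged(prev_weights: Sequence[float],
                          curr_weights: Sequence[float],
                          tol: float = 1e-4) -> bool:
    """Return True when the L2 norm of the weight change drops below tol.

    Illustrative only: the exact threshold rule is an assumption.
    """
    if len(prev_weights) != len(curr_weights):
        raise ValueError("weight vectors must have the same length")
    diff = math.sqrt(sum((c - p) ** 2
                         for p, c in zip(prev_weights, curr_weights)))
    return diff < tol
```

In this sketch the arbiter would call the function once per iteration with the complete weight vectors of the previous and current iteration.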

Heterogeneous SSHE Logistic Regression¶

FATE implements a heterogeneous logistic regression without an arbiter role, called hetero_sshe_lr. More details are available in the following paper: When Homomorphic Encryption Marries Secret Sharing: Secure Large-Scale Sparse Logistic Regression and Applications in Risk Control. We have also made some optimizations, so the code may not be exactly the same as in the paper.

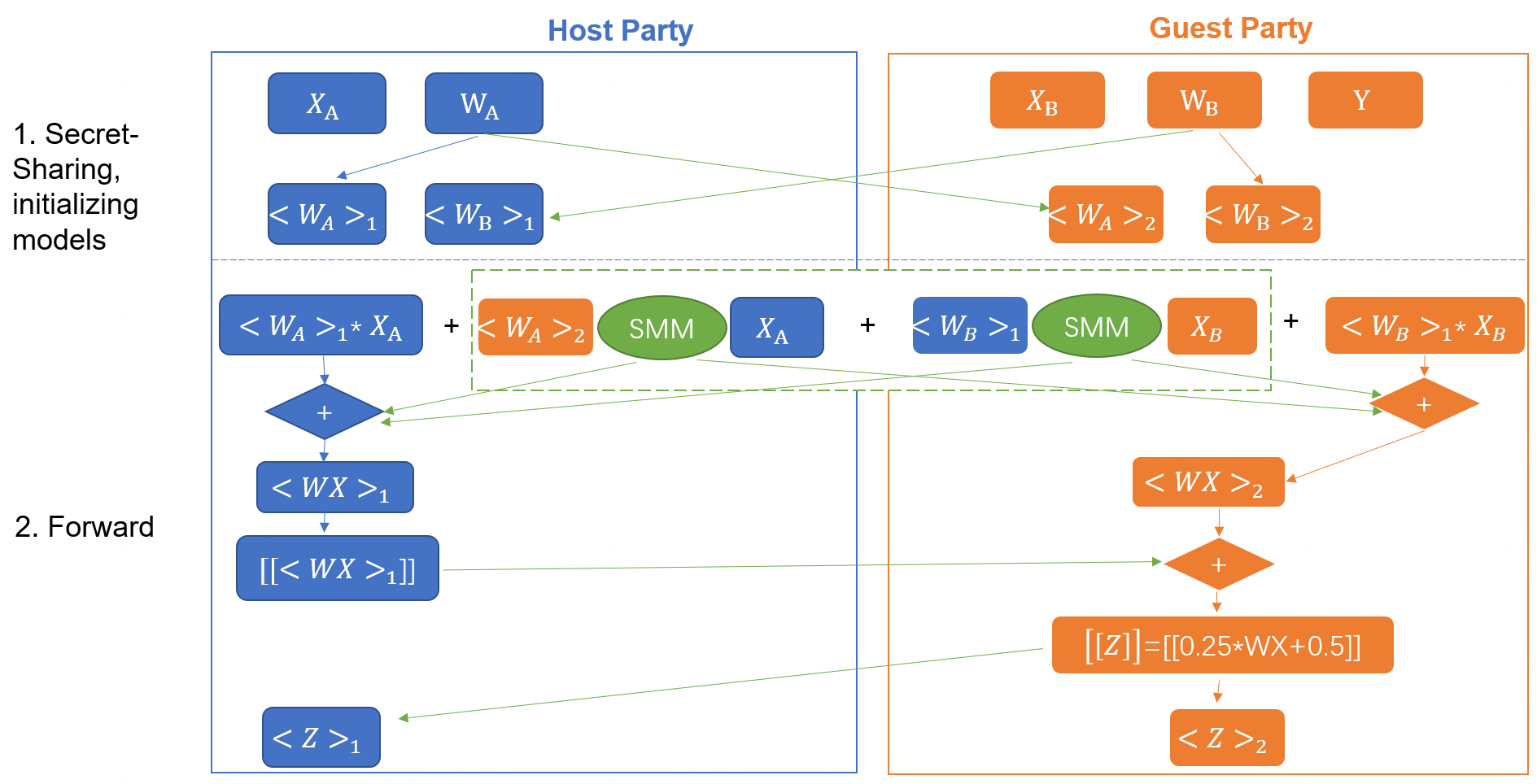

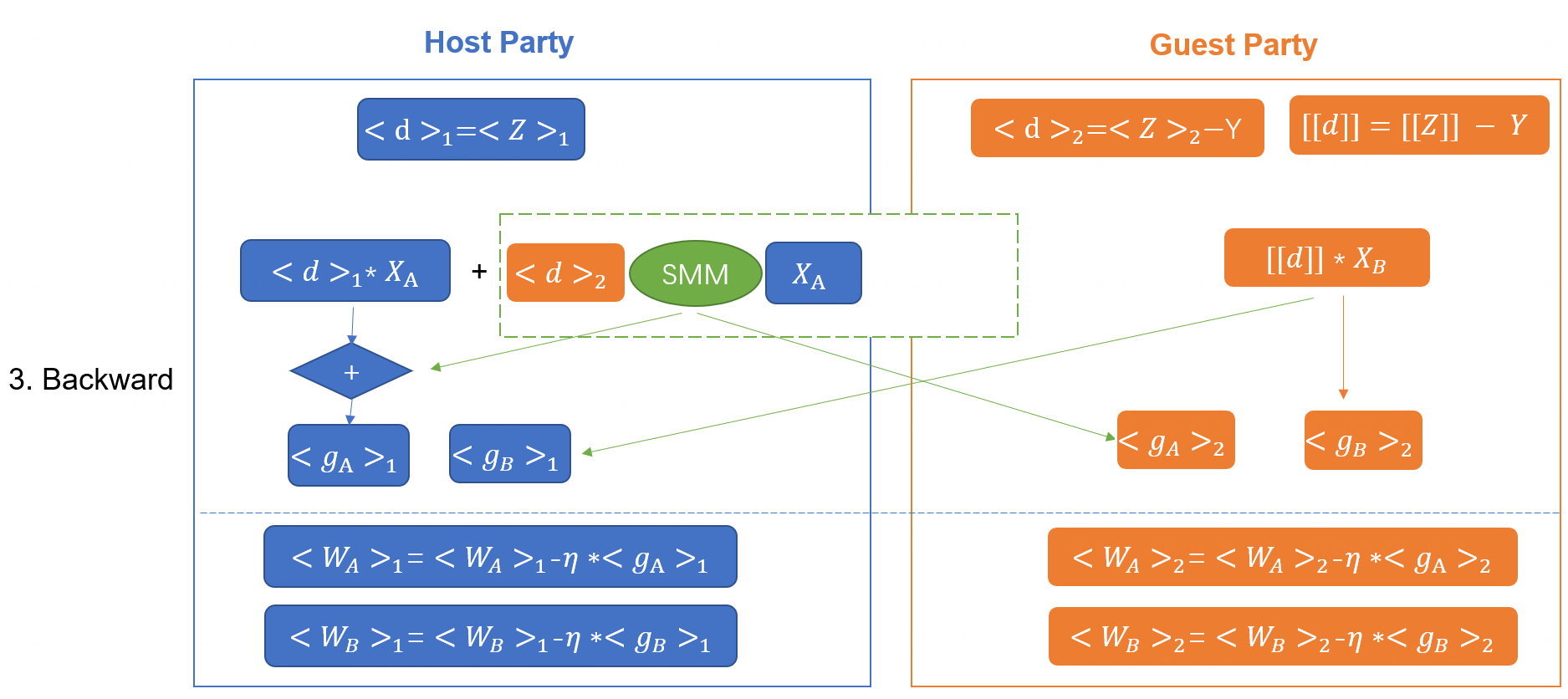

The training process can be described as the following forward and backward process.

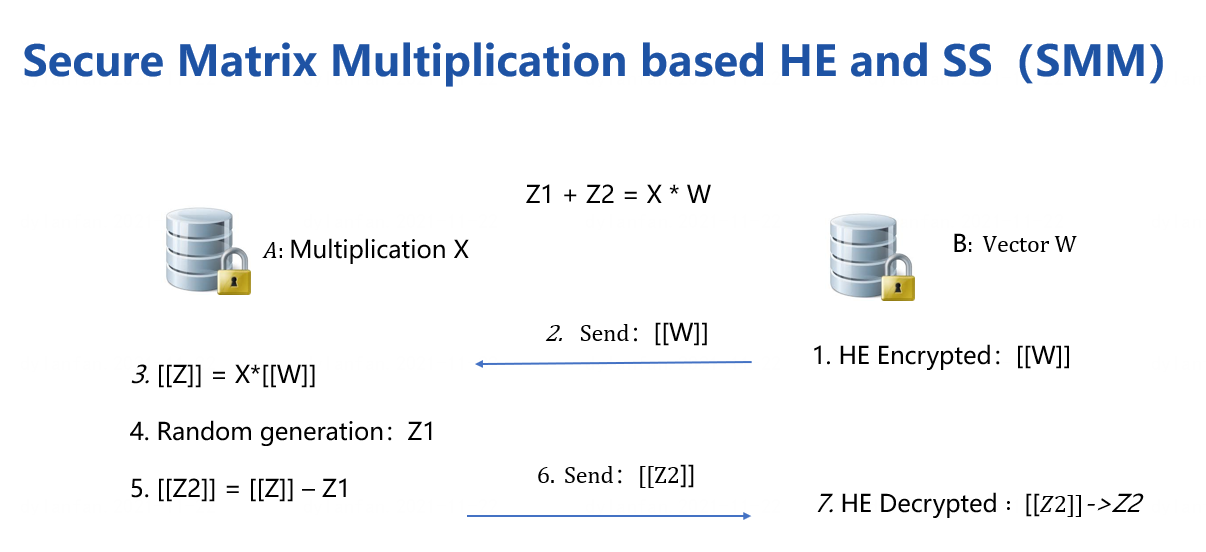

The training process is based on a secure matrix multiplication (SMM) protocol, which combines homomorphic encryption (HE) and secret sharing in a hybrid protocol.
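To give a feel for the secret-sharing half of this hybrid, here is a small sketch of additive secret sharing over an integer ring. It is not the SMM protocol itself: the homomorphic-encryption part is omitted, and the ring size and all function names are assumptions chosen for illustration.

```python
import secrets
from typing import Tuple

MODULUS = 2 ** 64  # hypothetical ring size, chosen only for this sketch


def share(value: int, modulus: int = MODULUS) -> Tuple[int, int]:
    """Split value into two additive shares; each share alone looks random."""
    r = secrets.randbelow(modulus)
    return r, (value - r) % modulus


def reconstruct(share_a: int, share_b: int, modulus: int = MODULUS) -> int:
    """Recover the secret by adding both shares modulo the ring size."""
    return (share_a + share_b) % modulus


def add_shares(x_shares: Tuple[int, int],
               y_shares: Tuple[int, int],
               modulus: int = MODULUS) -> Tuple[int, int]:
    """Each party adds its own shares locally; no communication is needed."""
    return ((x_shares[0] + y_shares[0]) % modulus,
            (x_shares[1] + y_shares[1]) % modulus)
```

Addition of shared values is local, which is why protocols of this kind only need extra machinery (here, HE) for multiplications.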

Param¶

logistic_regression_param

deprecated_param_list

Classes¶

LogisticParam (BaseParam)

Parameters used for logistic regression in both Homo mode and Hetero mode.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| penalty | {'L2', 'L1' or None} | Penalty method used in LR. Please note that when using the encrypted version in HomoLR, 'L1' is not supported. | 'L2' |
| tol | float, default: 1e-4 | The tolerance of convergence. | 0.0001 |
| alpha | float, default: 1.0 | Regularization strength coefficient. | 1.0 |
| optimizer | {'rmsprop', 'sgd', 'adam', 'nesterov_momentum_sgd', 'sqn', 'adagrad'}, default: 'rmsprop' | Optimization method. If 'sqn' is set, sqn_param takes effect. Currently, 'sqn' supports hetero mode only. | 'rmsprop' |
| batch_size | int, default: -1 | Batch size when updating the model. -1 means all data is used in a single batch, i.e. no mini-batch strategy is used. | -1 |
| learning_rate | float, default: 0.01 | Learning rate. | 0.01 |
| max_iter | int, default: 100 | The maximum number of training iterations. | 100 |
| early_stop | {'diff', 'weight_diff', 'abs'}, default: 'diff' | Method used to judge convergence. a) diff: use the difference of loss between two iterations. b) weight_diff: use the difference between the weights of two consecutive iterations. c) abs: use the absolute value of loss, i.e. if loss < eps, training has converged. Please note that in the hetero-lr multi-host situation, this parameter supports "weight_diff" only. | 'diff' |
| decay | int or float, default: 1 | Decay rate for the learning rate. The learning rate follows this schedule: `lr = lr0/(1+decay*t)` if decay_sqrt is False; if decay_sqrt is True, `lr = lr0 / sqrt(1+decay*t)`, where t is the iteration number. | 1 |
| decay_sqrt | bool, default: True | `lr = lr0/(1+decay*t)` if decay_sqrt is False, otherwise `lr = lr0 / sqrt(1+decay*t)`. | True |
| encrypt_param | EncryptParam object, default: default EncryptParam object | encrypt param | EncryptParam() |
| predict_param | PredictParam object, default: default PredictParam object | predict param | PredictParam() |
| callback_param | CallbackParam object | callback param | CallbackParam() |
| cv_param | CrossValidationParam object, default: default CrossValidationParam object | cv param | CrossValidationParam() |
| multi_class | {'ovr'}, default: 'ovr' | If it is a multi-class task, indicates which strategy to use. Currently, only 'ovr' (short for one_vs_rest) is supported. | 'ovr' |
| validation_freqs | int or list or tuple or set, or None, default None | Validation frequency during training. | None |
| early_stopping_rounds | int, default: None | Training stops if one metric doesn't improve in the last early_stopping_rounds rounds. | None |
| metrics | list or None, default: None | Indicates which metrics are used when evaluation is executed during training. If left empty, the default metrics for the specific task type are used. For binary classification, the default metrics are ['auc', 'ks']. | None |
| use_first_metric_only | bool, default: False | Indicates whether to use only the first metric for the early stopping judgement. | False |
| floating_point_precision | None or integer | If not None, use floating_point_precision bits to speed up calculation, e.g. convert x to `round(x * 2**floating_point_precision)` during Paillier operations and divide the result by `2**floating_point_precision` at the end. | 23 |
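The two learning-rate schedules selected by `decay` and `decay_sqrt` follow directly from the formulas in the table. The helper below is a standalone sketch with a hypothetical name; it is not part of the FATE API.

```python
import math


def decayed_learning_rate(lr0: float, decay: float, t: int,
                          decay_sqrt: bool = True) -> float:
    """Learning rate at iteration t for the two schedules in the table.

    decay_sqrt=True  -> lr0 / sqrt(1 + decay * t)
    decay_sqrt=False -> lr0 / (1 + decay * t)
    """
    if decay_sqrt:
        return lr0 / math.sqrt(1 + decay * t)
    return lr0 / (1 + decay * t)


if __name__ == "__main__":
    # Compare both schedules over the first few iterations.
    for t in range(4):
        print(t,
              decayed_learning_rate(0.01, 1, t, decay_sqrt=True),
              decayed_learning_rate(0.01, 1, t, decay_sqrt=False))
```

With the defaults (`decay=1`, `decay_sqrt=True`), the square-root schedule shrinks the step size more slowly than the plain inverse schedule.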

Source code in federatedml/param/logistic_regression_param.py

```python
class LogisticParam(BaseParam):
    """
    Parameters used for Logistic Regression both for Homo mode or Hetero mode.

    Parameters
    ----------
    penalty : {'L2', 'L1' or None}
        Penalty method used in LR. Please note that, when using encrypted version in HomoLR,
        'L1' is not supported.

    tol : float, default: 1e-4
        The tolerance of convergence

    alpha : float, default: 1.0
        Regularization strength coefficient.

    optimizer : {'rmsprop', 'sgd', 'adam', 'nesterov_momentum_sgd', 'sqn', 'adagrad'}, default: 'rmsprop'
        Optimize method, if 'sqn' has been set, sqn_param will take effect. Currently, 'sqn' support hetero mode only.

    batch_size : int, default: -1
        Batch size when updating model. -1 means use all data in a batch. i.e. Not to use mini-batch strategy.

    learning_rate : float, default: 0.01
        Learning rate

    max_iter : int, default: 100
        The maximum iteration for training.

    early_stop : {'diff', 'weight_diff', 'abs'}, default: 'diff'
        Method used to judge converge or not.
            a) diff: Use difference of loss between two iterations to judge whether converge.
            b) weight_diff: Use difference between weights of two consecutive iterations
            c) abs: Use the absolute value of loss to judge whether converge. i.e. if loss < eps, it is converged.

            Please note that for hetero-lr multi-host situation, this parameter support "weight_diff" only.

    decay: int or float, default: 1
        Decay rate for learning rate. learning rate will follow the following decay schedule.
        lr = lr0/(1+decay*t) if decay_sqrt is False. If decay_sqrt is True, lr = lr0 / sqrt(1+decay*t)
        where t is the iter number.

    decay_sqrt: bool, default: True
        lr = lr0/(1+decay*t) if decay_sqrt is False, otherwise, lr = lr0 / sqrt(1+decay*t)

    encrypt_param: EncryptParam object, default: default EncryptParam object
        encrypt param

    predict_param: PredictParam object, default: default PredictParam object
        predict param

    callback_param: CallbackParam object
        callback param

    cv_param: CrossValidationParam object, default: default CrossValidationParam object
        cv param

    multi_class: {'ovr'}, default: 'ovr'
        If it is a multi_class task, indicate what strategy to use. Currently, support 'ovr' short for one_vs_rest only.

    validation_freqs: int or list or tuple or set, or None, default None
        validation frequency during training.

    early_stopping_rounds: int, default: None
        Will stop training if one metric doesn’t improve in last early_stopping_round rounds

    metrics: list or None, default: None
        Indicate when executing evaluation during train process, which metrics will be used. If set as empty,
        default metrics for specific task type will be used. As for binary classification, default metrics are
        ['auc', 'ks']

    use_first_metric_only: bool, default: False
        Indicate whether use the first metric only for early stopping judgement.

    floating_point_precision: None or integer
        if not None, use floating_point_precision-bit to speed up calculation,
        e.g.: convert an x to round(x * 2**floating_point_precision) during Paillier operation, divide
        the result by 2**floating_point_precision in the end.

    """

    def __init__(self, penalty='L2',
                 tol=1e-4, alpha=1.0, optimizer='rmsprop',
                 batch_size=-1, learning_rate=0.01, init_param=InitParam(),
                 max_iter=100, early_stop='diff', encrypt_param=EncryptParam(),
                 predict_param=PredictParam(), cv_param=CrossValidationParam(),
                 decay=1, decay_sqrt=True,
                 multi_class='ovr', validation_freqs=None, early_stopping_rounds=None,
                 stepwise_param=StepwiseParam(), floating_point_precision=23,
                 metrics=None,
                 use_first_metric_only=False,
                 callback_param=CallbackParam()
                 ):
        super(LogisticParam, self).__init__()
        self.penalty = penalty
        self.tol = tol
        self.alpha = alpha
        self.optimizer = optimizer
        self.batch_size = batch_size
        self.learning_rate = learning_rate
        self.init_param = copy.deepcopy(init_param)
        self.max_iter = max_iter
        self.early_stop = early_stop
        self.encrypt_param = encrypt_param
        self.predict_param = copy.deepcopy(predict_param)
        self.cv_param = copy.deepcopy(cv_param)
        self.decay = decay
        self.decay_sqrt = decay_sqrt
        self.multi_class = multi_class
        self.validation_freqs = validation_freqs
        self.stepwise_param = copy.deepcopy(stepwise_param)
        self.early_stopping_rounds = early_stopping_rounds
        self.metrics = metrics or []
        self.use_first_metric_only = use_first_metric_only
        self.floating_point_precision = floating_point_precision
        self.callback_param = copy.deepcopy(callback_param)

    def check(self):
        descr = "logistic_param's"

        if self.penalty is None:
            pass
        elif type(self.penalty).__name__ != "str":
            raise ValueError(
                "logistic_param's penalty {} not supported, should be str type".format(self.penalty))
        else:
            self.penalty = self.penalty.upper()
            if self.penalty not in [consts.L1_PENALTY, consts.L2_PENALTY, 'NONE']:
                raise ValueError(
                    "logistic_param's penalty not supported, penalty should be 'L1', 'L2' or 'none'")

        if not isinstance(self.tol, (int, float)):
            raise ValueError(
                "logistic_param's tol {} not supported, should be float type".format(self.tol))

        if type(self.alpha).__name__ not in ["float", 'int']:
            raise ValueError(
                "logistic_param's alpha {} not supported, should be float or int type".format(self.alpha))

        if type(self.optimizer).__name__ != "str":
            raise ValueError(
                "logistic_param's optimizer {} not supported, should be str type".format(self.optimizer))
        else:
            self.optimizer = self.optimizer.lower()
            if self.optimizer not in ['sgd', 'rmsprop', 'adam', 'adagrad', 'nesterov_momentum_sgd', 'sqn']:
                raise ValueError(
                    "logistic_param's optimizer not supported, optimizer should be"
                    " 'sgd', 'rmsprop', 'adam', 'nesterov_momentum_sgd', 'sqn' or 'adagrad'")

        if self.batch_size != -1:
            if type(self.batch_size).__name__ not in ["int"] \
                    or self.batch_size < consts.MIN_BATCH_SIZE:
                raise ValueError(descr + " {} not supported, should be larger than {} or "
                                         "-1 represent for all data".format(self.batch_size, consts.MIN_BATCH_SIZE))

        if not isinstance(self.learning_rate, (float, int)):
            raise ValueError(
                "logistic_param's learning_rate {} not supported, should be float or int type".format(
                    self.learning_rate))

        self.init_param.check()

        if type(self.max_iter).__name__ != "int":
            raise ValueError(
                "logistic_param's max_iter {} not supported, should be int type".format(self.max_iter))
        elif self.max_iter <= 0:
            raise ValueError(
                "logistic_param's max_iter must be greater or equal to 1")

        if type(self.early_stop).__name__ != "str":
            raise ValueError(
                "logistic_param's early_stop {} not supported, should be str type".format(
                    self.early_stop))
        else:
            self.early_stop = self.early_stop.lower()
            if self.early_stop not in ['diff', 'abs', 'weight_diff']:
                raise ValueError(
                    "logistic_param's early_stop not supported, converge_func should be"
                    " 'diff', 'weight_diff' or 'abs'")

        self.encrypt_param.check()
        self.predict_param.check()
        if self.encrypt_param.method not in [consts.PAILLIER, None]:
            raise ValueError(
                "logistic_param's encrypted method support 'Paillier' or None only")

        if type(self.decay).__name__ not in ["int", 'float']:
            raise ValueError(
                "logistic_param's decay {} not supported, should be 'int' or 'float'".format(
                    self.decay))

        if type(self.decay_sqrt).__name__ not in ['bool']:
            raise ValueError(
                "logistic_param's decay_sqrt {} not supported, should be 'bool'".format(
                    self.decay_sqrt))
        self.stepwise_param.check()

        if self.early_stopping_rounds is None:
            pass
        elif isinstance(self.early_stopping_rounds, int):
            if self.early_stopping_rounds < 1:
                raise ValueError("early stopping rounds should be larger than 0 when it's integer")
            if self.validation_freqs is None:
                raise ValueError("validation freqs must be set when early stopping is enabled")

        if self.metrics is not None and not isinstance(self.metrics, list):
            raise ValueError("metrics should be a list")

        if not isinstance(self.use_first_metric_only, bool):
            raise ValueError("use_first_metric_only should be a boolean")

        if self.floating_point_precision is not None and \
                (not isinstance(self.floating_point_precision, int) or \
                 self.floating_point_precision < 0 or self.floating_point_precision > 63):
            raise ValueError("floating point precision should be null or a integer between 0 and 63")

        for p in ["early_stopping_rounds", "validation_freqs", "metrics",
                  "use_first_metric_only"]:
            # if self._warn_to_deprecate_param(p, "", ""):
            if self._deprecated_params_set.get(p):
                if "callback_param" in self.get_user_feeded():
                    raise ValueError(f"{p} and callback param should not be set simultaneously,"
                                     f"{self._deprecated_params_set}, {self.get_user_feeded()}")
                else:
                    self.callback_param.callbacks = ["PerformanceEvaluate"]
                break

        if self._warn_to_deprecate_param("validation_freqs", descr, "callback_param's 'validation_freqs'"):
            self.callback_param.validation_freqs = self.validation_freqs

        if self._warn_to_deprecate_param("early_stopping_rounds", descr, "callback_param's 'early_stopping_rounds'"):
            self.callback_param.early_stopping_rounds = self.early_stopping_rounds

        if self._warn_to_deprecate_param("metrics", descr, "callback_param's 'metrics'"):
            self.callback_param.metrics = self.metrics

        if self._warn_to_deprecate_param("use_first_metric_only", descr, "callback_param's 'use_first_metric_only'"):
            self.callback_param.use_first_metric_only = self.use_first_metric_only
        return True
```
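The `floating_point_precision` description (convert `x` to `round(x * 2**floating_point_precision)`, then divide by `2**floating_point_precision` at the end) amounts to a fixed-point encoding. The sketch below shows that arithmetic on plain integers. The function names are hypothetical, and the Paillier encryption that would wrap the integers in practice is left out.

```python
def encode_fixed_point(x: float, precision: int = 23) -> int:
    """Scale a float to an integer with `precision` fractional bits."""
    return round(x * 2 ** precision)


def decode_fixed_point(value: int, precision: int = 23) -> float:
    """Undo the scaling after all integer arithmetic is finished."""
    return value / 2 ** precision


if __name__ == "__main__":
    # Additions are carried out on the scaled integers; the result is
    # divided by 2**precision only once, at the very end.
    total = encode_fixed_point(0.1) + encode_fixed_point(0.2)
    print(decode_fixed_point(total))
```

The rounding error per encoded value is at most half of `2**-precision`, which is why a larger precision gives more accurate results at the cost of larger integers.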


HomoLogisticParam (LogisticParam)

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| re_encrypt_batches | int, default: 2 | Required when using the encrypted version of HomoLR. Updating the coefficients over multiple batches may cause an overflow error, so the model needs to be re-encrypted every few batches. Please be careful when setting this parameter: too many batches between re-encryptions may cause training failure. | 2 |
| aggregate_iters | int, default: 1 | Indicates how many iterations pass between aggregations. | 1 |
| use_proximal | bool, default: False | Whether to turn on the additional proximal term. For more details on FedProx, please refer to https://arxiv.org/abs/1812.06127 | False |
| mu | float, default 0.1 | Scales the proximal term. | 0.1 |
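As a rough illustration of what `use_proximal` and `mu` control: FedProx adds the term `(mu / 2) * ||w - w_global||^2` to each party's local objective, which contributes `mu * (w - w_global)` to the local gradient. The helper below sketches only that gradient adjustment. Its name and the list-based representation are assumptions, and it is not taken from FATE's implementation.

```python
from typing import List, Sequence


def proximal_gradient(local_gradient: Sequence[float],
                      local_weights: Sequence[float],
                      global_weights: Sequence[float],
                      mu: float = 0.1) -> List[float]:
    """Add the FedProx proximal-term gradient mu * (w - w_global).

    Hypothetical helper for illustration; pulls local weights toward
    the most recent global (aggregated) weights.
    """
    return [g + mu * (w - wg)
            for g, w, wg in zip(local_gradient, local_weights, global_weights)]
```

A larger `mu` keeps local models closer to the global model between aggregations, which matters most when `aggregate_iters` is greater than 1.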

Source code in federatedml/param/logistic_regression_param.py

```python
class HomoLogisticParam(LogisticParam):
    """
    Parameters
    ----------
    re_encrypt_batches : int, default: 2
        Required when using encrypted version HomoLR. Since multiple batch updating coefficient may cause
        overflow error. The model need to be re-encrypt for every several batches. Please be careful when setting
        this parameter. Too large batches may cause training failure.

    aggregate_iters : int, default: 1
        Indicate how many iterations are aggregated once.

    use_proximal: bool, default: False
        Whether to turn on additional proximial term. For more details of FedProx, Please refer to
        https://arxiv.org/abs/1812.06127

    mu: float, default 0.1
        To scale the proximal term

    """

    def __init__(self, penalty='L2',
                 tol=1e-4, alpha=1.0, optimizer='rmsprop',
                 batch_size=-1, learning_rate=0.01, init_param=InitParam(),
                 max_iter=100, early_stop='diff',
                 encrypt_param=EncryptParam(method=None), re_encrypt_batches=2,
                 predict_param=PredictParam(), cv_param=CrossValidationParam(),
                 decay=1, decay_sqrt=True,
                 aggregate_iters=1, multi_class='ovr', validation_freqs=None,
                 early_stopping_rounds=None,
                 metrics=['auc', 'ks'],
                 use_first_metric_only=False,
                 use_proximal=False,
                 mu=0.1, callback_param=CallbackParam()
                 ):
        super(HomoLogisticParam, self).__init__(penalty=penalty, tol=tol, alpha=alpha, optimizer=optimizer,
                                                batch_size=batch_size,
                                                learning_rate=learning_rate,
                                                init_param=init_param, max_iter=max_iter, early_stop=early_stop,
                                                encrypt_param=encrypt_param, predict_param=predict_param,
                                                cv_param=cv_param, multi_class=multi_class,
                                                validation_freqs=validation_freqs,
                                                decay=decay, decay_sqrt=decay_sqrt,
                                                early_stopping_rounds=early_stopping_rounds,
                                                metrics=metrics, use_first_metric_only=use_first_metric_only,
                                                callback_param=callback_param)
        self.re_encrypt_batches = re_encrypt_batches
        self.aggregate_iters = aggregate_iters
        self.use_proximal = use_proximal
        self.mu = mu

    def check(self):
        super().check()
        if type(self.re_encrypt_batches).__name__ != "int":
            raise ValueError(
                "logistic_param's re_encrypt_batches {} not supported, should be int type".format(
                    self.re_encrypt_batches))
        elif self.re_encrypt_batches < 0:
            raise ValueError(
                "logistic_param's re_encrypt_batches must be greater or equal to 0")

        if not isinstance(self.aggregate_iters, int):
            raise ValueError(
                "logistic_param's aggregate_iters {} not supported, should be int type".format(
                    self.aggregate_iters))

        if self.encrypt_param.method == consts.PAILLIER:
            if self.optimizer != 'sgd':
                raise ValueError("Paillier encryption mode supports 'sgd' optimizer method only.")

            if self.penalty == consts.L1_PENALTY:
                raise ValueError("Paillier encryption mode supports 'L2' penalty or None only.")

        if self.optimizer == 'sqn':
            raise ValueError("'sqn' optimizer is supported for hetero mode only.")

        return True
```


HeteroLogisticParam (LogisticParam)

Source code in federatedml/param/logistic_regression_param.py

```python
class HeteroLogisticParam(LogisticParam):
    def __init__(self, penalty='L2',
                 tol=1e-4, alpha=1.0, optimizer='rmsprop',
                 batch_size=-1, learning_rate=0.01, init_param=InitParam(),
                 max_iter=100, early_stop='diff',
                 encrypted_mode_calculator_param=EncryptedModeCalculatorParam(),
                 predict_param=PredictParam(), cv_param=CrossValidationParam(),
                 decay=1, decay_sqrt=True, sqn_param=StochasticQuasiNewtonParam(),
                 multi_class='ovr', validation_freqs=None, early_stopping_rounds=None,
                 metrics=['auc', 'ks'], floating_point_precision=23,
                 encrypt_param=EncryptParam(),
                 use_first_metric_only=False, stepwise_param=StepwiseParam(),
                 callback_param=CallbackParam()
                 ):
        super(HeteroLogisticParam, self).__init__(penalty=penalty, tol=tol, alpha=alpha, optimizer=optimizer,
                                                  batch_size=batch_size,
                                                  learning_rate=learning_rate,
                                                  init_param=init_param, max_iter=max_iter, early_stop=early_stop,
                                                  predict_param=predict_param, cv_param=cv_param,
                                                  decay=decay,
                                                  decay_sqrt=decay_sqrt, multi_class=multi_class,
                                                  validation_freqs=validation_freqs,
                                                  early_stopping_rounds=early_stopping_rounds,
                                                  metrics=metrics, floating_point_precision=floating_point_precision,
                                                  encrypt_param=encrypt_param,
                                                  use_first_metric_only=use_first_metric_only,
                                                  stepwise_param=stepwise_param,
                                                  callback_param=callback_param)
        self.encrypted_mode_calculator_param = copy.deepcopy(encrypted_mode_calculator_param)
        self.sqn_param = copy.deepcopy(sqn_param)

    def check(self):
        super().check()
        self.encrypted_mode_calculator_param.check()
        self.sqn_param.check()
        return True
```

__init__(self, penalty='L2', tol=0.0001, alpha=1.0, optimizer='rmsprop', batch_size=-1, learning_rate=0.01, init_param=InitParam(), max_iter=100, early_stop='diff', encrypted_mode_calculator_param=EncryptedModeCalculatorParam(), predict_param=PredictParam(), cv_param=CrossValidationParam(), decay=1, decay_sqrt=True, sqn_param=StochasticQuasiNewtonParam(), multi_class='ovr', validation_freqs=None, early_stopping_rounds=None, metrics=['auc', 'ks'], floating_point_precision=23, encrypt_param=EncryptParam(), use_first_metric_only=False, stepwise_param=StepwiseParam(), callback_param=CallbackParam()) special¶

Source code in federatedml/param/logistic_regression_param.py

    def __init__(self, penalty='L2',
                 tol=1e-4, alpha=1.0, optimizer='rmsprop',
                 batch_size=-1, learning_rate=0.01, init_param=InitParam(),
                 max_iter=100, early_stop='diff',
                 encrypted_mode_calculator_param=EncryptedModeCalculatorParam(),
                 predict_param=PredictParam(), cv_param=CrossValidationParam(),
                 decay=1, decay_sqrt=True, sqn_param=StochasticQuasiNewtonParam(),
                 multi_class='ovr', validation_freqs=None, early_stopping_rounds=None,
                 metrics=['auc', 'ks'], floating_point_precision=23,
                 encrypt_param=EncryptParam(),
                 use_first_metric_only=False, stepwise_param=StepwiseParam(),
                 callback_param=CallbackParam()
                 ):
        super(HeteroLogisticParam, self).__init__(penalty=penalty, tol=tol, alpha=alpha, optimizer=optimizer,
                                                  batch_size=batch_size,
                                                  learning_rate=learning_rate,
                                                  init_param=init_param, max_iter=max_iter, early_stop=early_stop,
                                                  predict_param=predict_param, cv_param=cv_param,
                                                  decay=decay,
                                                  decay_sqrt=decay_sqrt, multi_class=multi_class,
                                                  validation_freqs=validation_freqs,
                                                  early_stopping_rounds=early_stopping_rounds,
                                                  metrics=metrics, floating_point_precision=floating_point_precision,
                                                  encrypt_param=encrypt_param,
                                                  use_first_metric_only=use_first_metric_only,
                                                  stepwise_param=stepwise_param,
                                                  callback_param=callback_param)
        self.encrypted_mode_calculator_param = copy.deepcopy(encrypted_mode_calculator_param)
        self.sqn_param = copy.deepcopy(sqn_param)
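The constructor stores `copy.deepcopy(...)` of `encrypted_mode_calculator_param` and `sqn_param` rather than the arguments themselves. The source does not say why; one plausible reason is that Python evaluates default argument values once, when the function is defined, so instances built with the defaults would otherwise share a single object. The sketch below illustrates that behavior with hypothetical stand-in classes (`SubParam`, `SharedParam`, `CopiedParam`); none of them are FATE classes.

```python
import copy


class SubParam:
    """Hypothetical stand-in for a nested parameter object."""

    def __init__(self, value=5):
        self.value = value


class SharedParam:
    """Stores the default object directly, so all instances share it."""

    def __init__(self, sub=SubParam()):
        self.sub = sub


class CopiedParam:
    """Stores a deep copy, mirroring the pattern in the constructor above."""

    def __init__(self, sub=SubParam()):
        self.sub = copy.deepcopy(sub)


a, b = SharedParam(), SharedParam()
a.sub.value = 99  # also visible through b.sub: both names refer to one object
shared_leaks = b.sub.value == 99

c, d = CopiedParam(), CopiedParam()
c.sub.value = 99  # d.sub is an independent copy and keeps the default
copied_isolated = d.sub.value == 5

print(shared_leaks, copied_isolated)
```

With the deep copy, changing a nested parameter on one instance cannot silently alter another instance that was built from the same default.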

check(self)¶

Source code in federatedml/param/logistic_regression_param.py

    def check(self):
        super().check()
        self.encrypted_mode_calculator_param.check()
        self.sqn_param.check()
        return True

Features¶

- Both Homo-LR and Hetero-LR
    - L1 & L2 regularization
    - Mini-batch mechanism
    - Weighted training
    - Six optimization methods:
        - sgd: gradient descent with arbitrary batch size
        - rmsprop: RMSProp
        - adam: Adam
        - adagrad: AdaGrad
        - nesterov_momentum_sgd: Nesterov Momentum
        - sqn: stochastic quasi-Newton. More details are available in A Quasi-Newton Method Based Vertical Federated Learning Framework for Logistic Regression.
    - Three convergence criteria:
        - diff: use the difference of loss between two iterations; not available for multi-host training
        - abs: use the absolute value of loss
        - weight_diff: use the difference of model weights
    - Support multi-host modeling tasks
    - Support validation every arbitrary number of iterations
    - Learning rate decay mechanism
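The convergence criteria and learning rate decay listed above can be sketched in a single-party training loop. This is a minimal illustration under stated assumptions, not FATE's implementation: the helper names (`decayed_lr`, `converged`, `train`) are hypothetical, the model has a single weight so that `weight_diff` reduces to an absolute difference, and the decay schedule is assumed from the `decay` and `decay_sqrt` parameter names.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grad(w, xs, ys):
    """Mean log-loss and its gradient for a one-feature model p = sigmoid(w * x)."""
    n = len(xs)
    loss, grad = 0.0, 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x)
        loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
        grad += (p - y) * x
    return loss / n, grad / n


def decayed_lr(lr0, decay, decay_sqrt, t):
    """Assumed schedule: lr0 / sqrt(1 + decay * t) if decay_sqrt, else lr0 / (1 + decay * t)."""
    denom = 1.0 + decay * t
    return lr0 / math.sqrt(denom) if decay_sqrt else lr0 / denom


def converged(early_stop, tol, loss, prev_loss, w, prev_w):
    """Illustrative versions of the three criteria named in the list above."""
    if early_stop == "diff":
        return prev_loss is not None and abs(prev_loss - loss) < tol
    if early_stop == "abs":
        return abs(loss) < tol
    if early_stop == "weight_diff":
        return prev_w is not None and abs(w - prev_w) < tol
    raise ValueError(f"unknown early_stop: {early_stop}")


def train(xs, ys, early_stop="diff", tol=1e-4, lr0=0.5, decay=1.0,
          decay_sqrt=True, max_iter=100):
    """Gradient descent that stops on the chosen criterion or at max_iter."""
    w, prev_w, prev_loss = 0.0, None, None
    for t in range(max_iter):
        loss, grad = loss_and_grad(w, xs, ys)
        if converged(early_stop, tol, loss, prev_loss, w, prev_w):
            return w, t
        prev_w, prev_loss = w, loss
        w -= decayed_lr(lr0, decay, decay_sqrt, t) * grad
    return w, max_iter


# Toy, non-separable data (made up for this sketch).
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 1, 0, 1, 1]
for criterion in ("diff", "abs", "weight_diff"):
    weight, iterations = train(xs, ys, early_stop=criterion)
    print(criterion, round(weight, 4), iterations)
```

On data like this the loss never approaches zero, so the `abs` criterion runs until `max_iter`, while `diff` and `weight_diff` can stop once successive iterations change little.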

- Homo-LR extra features
    - Two encryption modes:
        - "Paillier" mode: the host will not get the clear-text model. When using encryption mode, only the "sgd" optimizer is supported.
        - Non-encryption mode: everything is in clear text.
    - Secure aggregation mechanism used when aggregating models
    - Support aggregation every arbitrary number of iterations
    - Support FedProx mechanism. More details are available in Federated Optimization in Heterogeneous Networks.
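As a rough illustration of the FedProx idea referenced above: each party adds a proximal term `(mu / 2) * ||w - w_global||^2` to its local loss, which contributes `mu * (w - w_global)` to the local gradient and pulls the local model toward the last aggregated model. The function and variable names below (`fedprox_gradient`, `local_step`, `mu`) are chosen for this sketch and are not taken from FATE's code.

```python
def fedprox_gradient(local_grad, local_w, global_w, mu):
    """Gradient of local_loss(w) + (mu / 2) * ||w - global_w||^2 with respect to w."""
    return [g + mu * (w - gw) for g, w, gw in zip(local_grad, local_w, global_w)]


def local_step(local_w, local_grad, global_w, mu, lr):
    """One local gradient step using the proximal-adjusted gradient."""
    grad = fedprox_gradient(local_grad, local_w, global_w, mu)
    return [w - lr * g for w, g in zip(local_w, grad)]


# Made-up values for illustration.
global_w = [0.0, 0.0]
local_w = [1.0, -2.0]
local_grad = [0.5, 0.5]

plain = local_step(local_w, local_grad, global_w, mu=0.0, lr=0.1)  # no proximal term
prox = local_step(local_w, local_grad, global_w, mu=1.0, lr=0.1)   # with proximal term
print(plain, prox)
```

With `mu=0.0` the step is ordinary gradient descent; with a positive `mu` the updated weights land closer to `global_w`.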

- Hetero-LR extra features
    - Support different encrypt modes to balance speed and security
    - Support one-vs-rest (OneVsRest)
    - When modeling a multi-host task, only the "weight_diff" convergence criterion is supported.
    - Support sparse format data
    - Support early-stopping mechanism
    - Support setting arbitrary metrics for validation during training
    - Support stepwise. For details on stepwise mode, please refer to stepwise.

- Hetero-SSHE-LR extra features
    - Support different encrypt modes to balance speed and security
    - Support one-vs-rest (OneVsRest)
    - Support early-stopping mechanism
    - Support setting arbitrary metrics for validation during training
    - Support model encryption with host model
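The one-vs-rest support mentioned for Hetero-LR and Hetero-SSHE-LR (the `multi_class='ovr'` default) can be pictured as follows: a multi-class task is split into one binary task per class, and at prediction time the class whose binary model gives the highest score is chosen. This is a generic sketch of the technique with hypothetical helper names and made-up scores, not FATE's implementation.

```python
def ovr_binary_labels(labels, positive_class):
    """Relabel a multi-class target as 1 for positive_class and 0 for the rest."""
    return [1 if y == positive_class else 0 for y in labels]


def ovr_predict(scores_by_class):
    """Pick the class whose binary model reports the highest positive score."""
    return max(scores_by_class, key=scores_by_class.get)


labels = ["cat", "dog", "bird", "dog"]
classes = sorted(set(labels))

# One binary training target per class; each would train its own binary LR model.
binary_tasks = {c: ovr_binary_labels(labels, c) for c in classes}

# Made-up per-class scores for a single sample, standing in for model outputs.
prediction = ovr_predict({"bird": 0.2, "cat": 0.7, "dog": 0.4})
print(binary_tasks["dog"], prediction)
```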