Homogeneous Neural Networks

Neural networks are probably the most popular machine learning algorithms of recent years. FATE provides a federated homogeneous neural network implementation. We simplify the federation process into three parties. Party A represents the Guest, which acts as the task initiator. Party B represents the Host, which behaves almost the same as the Guest except that it does not initiate tasks. Party C serves as the coordinator (the Arbiter): it aggregates models from the guest and hosts and broadcasts the aggregated model.

Basic Process

As its name suggests, in Homogeneous Neural Networks the feature spaces of the guest and hosts are identical. An optional model encryption mode is provided; when it is enabled, no party can obtain the private model of any other party.

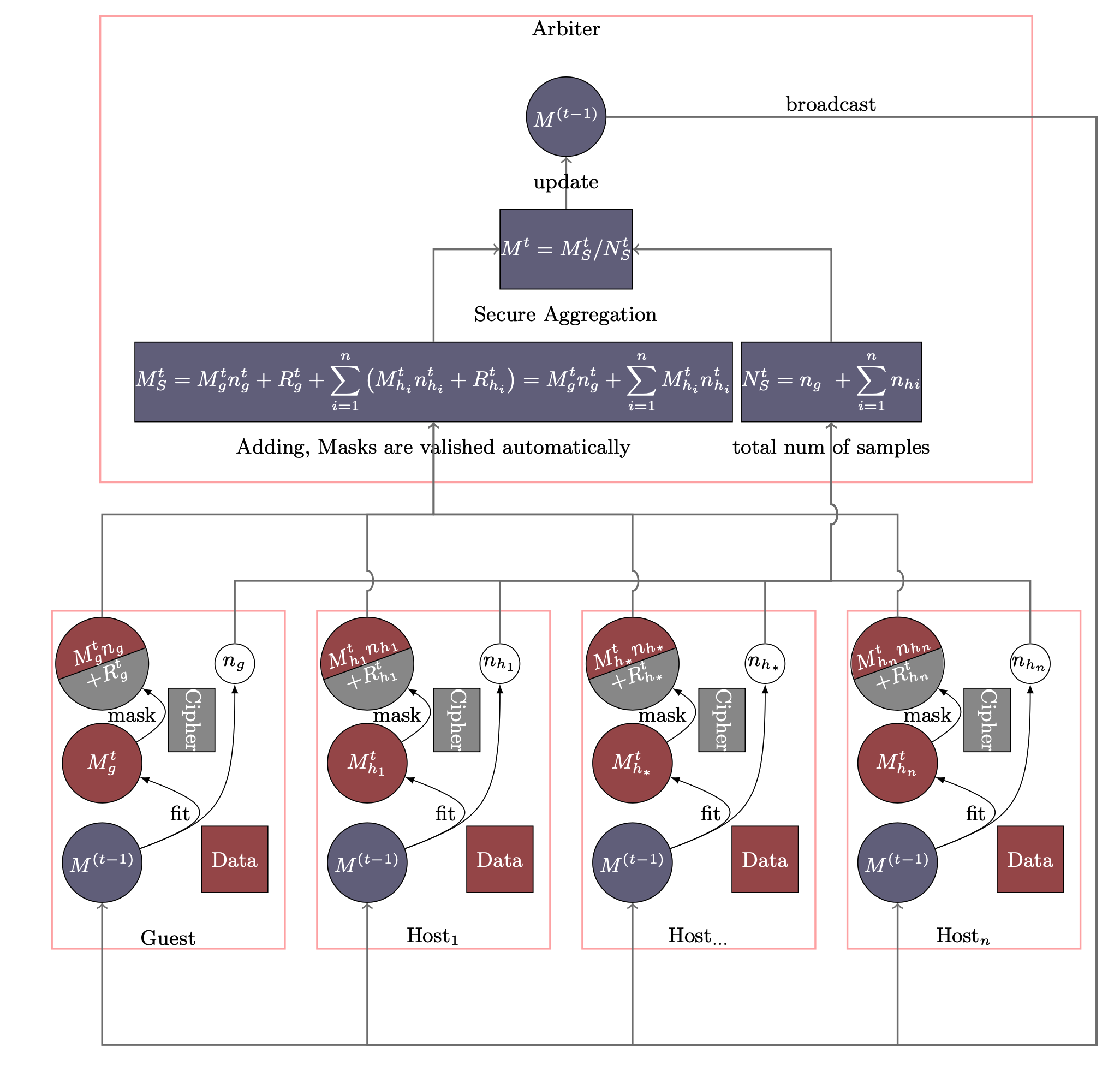

The Homo NN process is shown in Figure 1. The models of Party A and Party B have the same neural network structure. In each iteration, each party trains its model on its own data. After that, all parties upload their model parameters, encrypted with random masks, to the arbiter. The arbiter aggregates these parameters into a federated model parameter, which is then distributed to all parties to update their local models. As in conventional neural network training, the process stops when the federated model converges or the number of iterations reaches a predefined maximum.
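The aggregation step can be pictured as a weighted average of the parameters each party uploads, in the style of FedAvg (the examples on this page use a trainer named `fedavg_trainer`). The plain-Python sketch below assumes each party's weight is its local sample count and ignores masking entirely; `fedavg` is a hypothetical helper, not FATE's implementation.

```python
from typing import List, Sequence


def fedavg(params: Sequence[List[float]], weights: Sequence[float]) -> List[float]:
    """Weighted average of per-party parameter vectors (hypothetical helper)."""
    if not params or len(params) != len(weights):
        raise ValueError("need at least one party and one weight per party")
    total = sum(weights)
    dim = len(params[0])
    aggregated = [0.0] * dim
    for vec, w in zip(params, weights):
        if len(vec) != dim:
            raise ValueError("all parties must share the same model structure")
        for k in range(dim):
            aggregated[k] += vec[k] * (w / total)
    return aggregated


# Guest trained on 300 samples, host on 100 samples (illustrative numbers).
guest_params = [1.0, 2.0]
host_params = [3.0, 6.0]
print(fedavg([guest_params, host_params], [300, 100]))  # [1.5, 3.0]
```

Weighting by sample count keeps a party with little data from pulling the shared model as strongly as a party with much more data.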

Note that the random masks are generated so that the masks of all parties add up to a zero matrix and therefore cancel out during aggregation. For a more detailed explanation, please refer to Secure Analytics: Federated Learning and Secure Aggregation. Since no model is transferred in plaintext, no party other than the model's owner can obtain the real model information.
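The cancellation can be illustrated with pairwise masks: for every pair of parties (i, j) with i < j, a shared random vector is added by party i and subtracted by party j, so all masks sum to zero. This is a plain-Python sketch of the idea only, not FATE's protocol; in a real deployment each shared mask would be derived from a secret agreed between the two parties rather than from a single local random generator, and the function name is hypothetical.

```python
import random
from typing import List


def pairwise_masks(n_parties: int, dim: int, seed: int = 0) -> List[List[float]]:
    """Return one mask per party; the masks sum to the zero vector."""
    rng = random.Random(seed)
    masks = [[0.0] * dim for _ in range(n_parties)]
    for i in range(n_parties):
        for j in range(i + 1, n_parties):
            shared = [rng.uniform(-100, 100) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] += shared[k]  # party i adds the shared mask
                masks[j][k] -= shared[k]  # party j subtracts the same mask
    return masks


models = [[0.5, -1.0], [1.5, 2.0], [-0.5, 0.0]]  # plaintext parameters, never sent
masks = pairwise_masks(len(models), dim=2)
uploaded = [[w + m for w, m in zip(model, mask)] for model, mask in zip(models, masks)]

# The arbiter only sees `uploaded`, yet the column sums equal those of the
# true models, up to floating-point rounding.
summed = [sum(col) for col in zip(*uploaded)]
print(summed)
```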

Param

homo_nn_param

Classes

TrainerParam(trainer_name=None, **kwargs)

Bases: BaseParam

Defined in federatedml/param/homo_nn_param.py

Attributes

- trainer_name = trainer_name (instance-attribute)
- param = kwargs (instance-attribute)

Functions

- check()
- to_dict()

DatasetParam(dataset_name=None, **kwargs)

Bases: BaseParam

Defined in federatedml/param/homo_nn_param.py

Attributes

- dataset_name = dataset_name (instance-attribute)
- param = kwargs (instance-attribute)

Functions

- check()
- to_dict()

HomoNNParam(trainer=TrainerParam(), dataset=DatasetParam(), torch_seed=100, nn_define=None, loss=None, optimizer=None)

Bases: BaseParam

Defined in federatedml/param/homo_nn_param.py

Attributes

- trainer = trainer (instance-attribute)
- dataset = dataset (instance-attribute)
- torch_seed = torch_seed (instance-attribute)
- nn_define = nn_define (instance-attribute)
- loss = loss (instance-attribute)
- optimizer = optimizer (instance-attribute)

Functions

- check()
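Judging from the attributes above and the runtime configurations later on this page, `TrainerParam` and `DatasetParam` keep a name plus a `param` dict of the remaining keyword arguments, and appear in a job configuration as `{"trainer_name": ..., "param": {...}}` and `{"dataset_name": ..., "param": {...}}`. The classes below are hypothetical stand-ins written only to illustrate that shape; they are not the `federatedml` source and omit `BaseParam` and `check()`.

```python
import json
from typing import Any, Dict, Optional


class TrainerParamSketch:
    """Hypothetical stand-in mirroring TrainerParam(trainer_name=None, **kwargs)."""

    def __init__(self, trainer_name: Optional[str] = None, **kwargs: Any) -> None:
        self.trainer_name = trainer_name
        self.param = kwargs  # every extra keyword is kept for the trainer

    def to_dict(self) -> Dict[str, Any]:
        return {"trainer_name": self.trainer_name, "param": self.param}


class DatasetParamSketch:
    """Hypothetical stand-in mirroring DatasetParam(dataset_name=None, **kwargs)."""

    def __init__(self, dataset_name: Optional[str] = None, **kwargs: Any) -> None:
        self.dataset_name = dataset_name
        self.param = kwargs  # every extra keyword is kept for the dataset

    def to_dict(self) -> Dict[str, Any]:
        return {"dataset_name": self.dataset_name, "param": self.param}


trainer = TrainerParamSketch("fedavg_trainer", epochs=20, batch_size=128, validation_freqs=1)
dataset = DatasetParamSketch("table", flatten_label=True, label_dtype="long")
print(json.dumps({"trainer": trainer.to_dict(), "dataset": dataset.to_dict()}, indent=2))
```

The printed structure matches the `"trainer"` and `"dataset"` entries of the multi-class runtime configuration shown in the Examples section.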

Features

tensorflow backend

supported layers

Dense

{
    "layer": "Dense",
    "units": <positive integer, required>,
    "activation": null,
    "use_bias": true,
    "kernel_initializer": "glorot_uniform",
    "bias_initializer": "zeros",
    "kernel_regularizer": null,
    "bias_regularizer": null,
    "activity_regularizer": null,
    "kernel_constraint": null,
    "bias_constraint": null
}

Dropout

{
    "layer": "Dropout",
    "rate": <float between 0 and 1, required>,
    "noise_shape": null,
    "seed": null
}

Other layers listed in tf.keras.layers will be supported in the near future.

supported optimizers

All optimizers listed in tf.keras.optimizers are supported, for example:

{

"optimizer": "Adadelta",

"learning_rate": 0.001,

"rho": 0.95,

"epsilon": 1e-07

}

{

"optimizer": "Adagrad",

"learning_rate": 0.001,

"initial_accumulator_value": 0.1,

"epsilon": 1e-07

}

{

"optimizer": "Adam",

"learning_rate": 0.001,

"beta_1": 0.9,

"beta_2": 0.999,

"amsgrad": false,

"epsilon": 1e-07

}

{

"optimizer": "Ftrl",

"learning_rate": 0.001,

"learning_rate_power": -0.5,

"initial_accumulator_value": 0.1,

"l1_regularization_strength": 0.0,

"l2_regularization_strength": 0.0,

"l2_shrinkage_regularization_strength": 0.0

}

{

"optimizer": "Nadam",

"learning_rate": 0.001,

"beta_1": 0.9,

"beta_2": 0.999,

"epsilon": 1e-07

}

{

"optimizer": "RMSprop",

"learning_rate": 0.001,

"rho": 0.9,

"momentum": 0.0,

"epsilon": 1e-07,

"centered": false

}

{

"optimizer": "SGD",

"learning_rate": 0.001,

"momentum": 0.0,

"nesterov": false

}
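Each optimizer object above pairs a class name with constructor arguments. A minimal sketch of how such an object could be split, assuming the `"optimizer"` key names a class in `tf.keras.optimizers` and every other key is passed through as a keyword argument; `split_optimizer_config` is a hypothetical helper, and the TensorFlow call is left as a comment so the snippet runs without TensorFlow installed.

```python
import json
from typing import Any, Dict, Tuple


def split_optimizer_config(config: Dict[str, Any]) -> Tuple[str, Dict[str, Any]]:
    """Separate the optimizer class name from its keyword arguments."""
    kwargs = dict(config)           # copy so the caller's dict is left untouched
    name = kwargs.pop("optimizer")  # e.g. "RMSprop"
    return name, kwargs


raw = ('{"optimizer": "RMSprop", "learning_rate": 0.001, "rho": 0.9, '
       '"momentum": 0.0, "epsilon": 1e-07, "centered": false}')
name, kwargs = split_optimizer_config(json.loads(raw))
print(name, kwargs)
# With TensorFlow available, the optimizer could then be built as:
#   import tensorflow as tf
#   opt = getattr(tf.keras.optimizers, name)(**kwargs)
```

This is also why a misspelled key (for example `pho` instead of `rho`) would be rejected by the Keras constructor as an unexpected keyword argument.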

supported losses

All losses listed in tf.keras.losses are supported, including:

- binary_crossentropy

- categorical_crossentropy

- categorical_hinge

- cosine_similarity

- hinge

- kullback_leibler_divergence

- logcosh

- mean_absolute_error

- mean_absolute_percentage_error

- mean_squared_error

- mean_squared_logarithmic_error

- poisson

- sparse_categorical_crossentropy

- squared_hinge

multi-host support

Multiple hosts are supported. In fact, for model security reasons, at least two host parties are required.
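One way to see why a single host is not enough: if only two parties (the guest and one host) contribute to an average, either party can subtract its own parameters from the published aggregate and recover the other party's model exactly. The arithmetic below illustrates this under the simplifying assumption of an equal-weight average; it is an illustration of the risk, not a description of how FATE enforces the requirement.

```python
from typing import List


def average(models: List[List[float]]) -> List[float]:
    """Equal-weight average of parameter vectors."""
    n = len(models)
    return [sum(col) / n for col in zip(*models)]


guest = [0.25, -1.0]
host = [0.75, 3.0]
aggregate = average([guest, host])  # what the arbiter broadcasts

# The guest knows its own model and that n = 2, so it can solve for the host's model.
recovered_host = [2 * a - g for a, g in zip(aggregate, guest)]
print(recovered_host == host)  # True

# With three contributors the guest only learns the sum of the other two models.
host_b = [0.5, 1.0]
aggregate3 = average([guest, host, host_b])
others_sum = [3 * a - g for a, g in zip(aggregate3, guest)]
print(others_sum)
```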

pytorch backend

The nn configuration for models built with PyTorch differs from the TensorFlow/Keras configuration in a few ways:

- config_type: set to "pytorch" if you use PyTorch to build your model.
- nn_define: each layer is represented as an object in JSON.

supported layers

Linear

{
    "layer": "Linear",
    "name": "<string>",
    "type": "normal",
    "config": [input_num, output_num]
}

Other normal layers:

- BatchNorm2d
- Dropout

supported activations

Relu

{ "layer": "Relu", "type": "activate", "name": "<string>" }

Other activation layers:

- Selu
- LeakyReLU
- Tanh
- Sigmoid

supported optimizers

A JSON object is required. For example, Adam:

"optimizer": {
    "optimizer": "Adam",
    "learning_rate": 0.05
}

Supported optimizers include "Adam", "SGD", "RMSprop", and "Adagrad".

supported losses

A string is required. Supported losses include:

- "CrossEntropyLoss"

- "MSELoss"

- "BCELoss"

- "BCEWithLogitsLoss"

- "NLLLoss"

- "L1Loss"

- "SmoothL1Loss"

- "HingeEmbeddingLoss"
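Putting the pytorch-backend rules together (layers as JSON objects, the optimizer as a JSON object, the loss as a string), a configuration can be assembled and sanity-checked in plain Python before submission. This is a sketch only: `build_torch_nn_config`, its validation, and the layer names `line1`/`act1` are hypothetical; the key names simply follow the examples in this section.

```python
import json
from typing import Any, Dict, List

SUPPORTED_LOSSES = {
    "CrossEntropyLoss", "MSELoss", "BCELoss", "BCEWithLogitsLoss",
    "NLLLoss", "L1Loss", "SmoothL1Loss", "HingeEmbeddingLoss",
}
SUPPORTED_OPTIMIZERS = {"Adam", "SGD", "RMSprop", "Adagrad"}


def build_torch_nn_config(layers: List[Dict[str, Any]], optimizer: Dict[str, Any],
                          loss: str) -> Dict[str, Any]:
    """Assemble a pytorch-backend config dict, rejecting unsupported names."""
    if optimizer.get("optimizer") not in SUPPORTED_OPTIMIZERS:
        raise ValueError(f"unsupported optimizer: {optimizer.get('optimizer')}")
    if loss not in SUPPORTED_LOSSES:
        raise ValueError(f"unsupported loss: {loss}")
    return {"config_type": "pytorch", "nn_define": layers,
            "optimizer": optimizer, "loss": loss}


layers = [
    {"layer": "Linear", "name": "line1", "type": "normal", "config": [30, 1]},
    {"layer": "Sigmoid", "type": "activate", "name": "act1"},
]
config = build_torch_nn_config(layers, {"optimizer": "Adam", "learning_rate": 0.05}, "BCELoss")
print(json.dumps(config, indent=2))
```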

supported metrics

A string is required. Supported metrics include:

- accuracy

- precision

- recall

- auc

- f1

- fbeta
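For binary classification these metrics follow the standard definitions based on true/false positives and negatives. A minimal pure-Python sketch of accuracy, precision, recall and F-beta (f1 is the beta = 1 case); auc needs ranked scores and is omitted. The function name is hypothetical and this is not the evaluation code FATE uses.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                   beta: float = 1.0) -> Dict[str, float]:
    """Accuracy, precision, recall and F-beta for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    b2 = beta * beta
    denom = b2 * precision + recall
    fbeta = (1 + b2) * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "fbeta": fbeta}


m = binary_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
print(m)
```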

Use

Since all parties training a Homogeneous Neural Network share the same network structure, a common practice is to configure parameters under algorithm_parameters, which are shared across all parties. The basic structure is:

{

"config_type": "nn",

"nn_define": [layer1, layer2, ...],

"batch_size": -1,

"optimizer": optimizer,

"early_stop": {

"early_stop": early_stop_type,

"eps": 1e-4

},

"loss": loss,

"metrics": [metrics1, metrics2, ...],

"max_iter": 10

}

- nn_define: each layer is represented as an object in JSON. Please refer to the supported layers in the Features section.
- optimizer: a JSON object is required. Please refer to the supported optimizers in the Features section.
- loss: a string is required. Please refer to the supported losses in the Features section.
- others:
  - batch_size: a positive integer, or -1 for full batch
  - max_iter: the maximum number of aggregations, a positive integer
  - early_stop: diff or abs
  - metrics: a string name (refer to the metrics documentation), such as Accuracy, AUC ...
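The two early-stop modes can be read as two convergence tests on the training loss, compared against `eps`. A minimal sketch, assuming "diff" means the change in loss between two consecutive iterations is below `eps` and "abs" means the loss itself is below `eps`, with `max_iter` as a hard cap; `is_converged`, `run` and the loss values are hypothetical.

```python
from typing import Iterable, Optional


def is_converged(mode: str, loss: float, prev_loss: Optional[float], eps: float) -> bool:
    """Convergence test for the assumed "diff" and "abs" early-stop modes."""
    if mode == "diff":
        return prev_loss is not None and abs(prev_loss - loss) < eps
    if mode == "abs":
        return loss < eps
    raise ValueError(f"unknown early_stop mode: {mode}")


def run(losses: Iterable[float], mode: str, eps: float = 1e-4, max_iter: int = 10) -> int:
    """Return the number of aggregation rounds performed before stopping."""
    prev = None
    rounds = 0
    for loss in losses:
        rounds += 1
        if is_converged(mode, loss, prev, eps) or rounds >= max_iter:
            break
        prev = loss
    return rounds


losses = [0.9, 0.5, 0.30, 0.29995, 0.2999]
print(run(losses, "diff"))  # 4: |0.30 - 0.29995| < 1e-4
print(run(losses, "abs"))   # 5: the loss never drops below 1e-4
```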

Examples

## Homo Neural Network Pipeline Example Usage Guide.

#### Example Tasks

This section introduces the Pipeline scripts for different types of tasks.

1. Binary Task:

script: pipeline_homo_nn_train_binary.py

2. Multi-class Task:

script: pipeline_homo_nn_train_multi.py

3. Regression Task:

script: pipeline_homo_nn_train_regression.py

4. Binary Task, aggregating every N epochs:

script: pipeline_homo_nn_aggregate_n_epoch.py

Users can run a pipeline job directly:

python ${pipeline_script}

homo_nn_testsuite.json

{

"data": [

{

"file": "examples/data/breast_homo_guest.csv",

"head": 1,

"partition": 16,

"table_name": "breast_homo_guest",

"namespace": "experiment",

"role": "guest_0"

},

{

"file": "examples/data/breast_homo_host.csv",

"head": 1,

"partition": 16,

"table_name": "breast_homo_host",

"namespace": "experiment",

"role": "host_0"

},

{

"file": "examples/data/vehicle_scale_homo_guest.csv",

"head": 1,

"partition": 16,

"table_name": "vehicle_scale_homo_guest",

"namespace": "experiment",

"role": "guest_0"

},

{

"file": "examples/data/vehicle_scale_homo_host.csv",

"head": 1,

"partition": 16,

"table_name": "vehicle_scale_homo_host",

"namespace": "experiment",

"role": "host_0"

},

{

"file": "examples/data/student_homo_guest.csv",

"head": 1,

"partition": 16,

"table_name": "student_homo_guest",

"namespace": "experiment",

"role": "guest_0"

},

{

"file": "examples/data/student_homo_host.csv",

"head": 1,

"partition": 16,

"table_name": "student_homo_host",

"namespace": "experiment",

"role": "host_0"

}

],

"pipeline_tasks": {

"binary": {

"script": "./pipeline_homo_nn_train_binary.py"

},

"multi": {

"script": "./pipeline_homo_nn_train_multi.py"

},

"regression": {

"script": "./pipeline_homo_nn_train_regression.py"

},

"aggregate_every_n_epoch": {

"script": "./pipeline_homo_nn_aggregate_n_epoch.py"

}

}

}

pipeline_homo_nn_train_regression.py

import argparse

# torch
import torch as t
from torch import nn
from pipeline import fate_torch_hook

# pipeline
from pipeline.backend.pipeline import PipeLine
from pipeline.component import Reader, DataTransform, HomoNN, Evaluation
from pipeline.component.nn import TrainerParam
from pipeline.interface import Data
from pipeline.utils.tools import load_job_config

fate_torch_hook(t)


def main(config="../../config.yaml", namespace=""):
    # obtain config
    if isinstance(config, str):
        config = load_job_config(config)
    parties = config.parties
    guest = parties.guest[0]
    host = parties.host[0]
    arbiter = parties.arbiter[0]

    pipeline = PipeLine().set_initiator(role='guest', party_id=guest).set_roles(guest=guest, host=host, arbiter=arbiter)

    train_data_0 = {"name": "student_homo_guest", "namespace": "experiment"}
    train_data_1 = {"name": "student_homo_host", "namespace": "experiment"}

    reader_0 = Reader(name="reader_0")
    reader_0.get_party_instance(role='guest', party_id=guest).component_param(table=train_data_0)
    reader_0.get_party_instance(role='host', party_id=host).component_param(table=train_data_1)

    data_transform_0 = DataTransform(name='data_transform_0')
    data_transform_0.get_party_instance(
        role='guest', party_id=guest).component_param(
        with_label=True, output_format="dense")
    data_transform_0.get_party_instance(
        role='host', party_id=host).component_param(
        with_label=True, output_format="dense")

    model = nn.Sequential(
        nn.Linear(13, 1)
    )
    loss = nn.MSELoss()
    optimizer = t.optim.Adam(model.parameters(), lr=0.01)

    nn_component = HomoNN(name='nn_0',
                          model=model,
                          loss=loss,
                          optimizer=optimizer,
                          trainer=TrainerParam(trainer_name='fedavg_trainer', epochs=20, batch_size=128,
                                               validation_freqs=1),
                          torch_seed=100
                          )

    pipeline.add_component(reader_0)
    pipeline.add_component(data_transform_0, data=Data(data=reader_0.output.data))
    pipeline.add_component(nn_component, data=Data(train_data=data_transform_0.output.data))
    pipeline.add_component(Evaluation(name='eval_0', eval_type='regression'), data=Data(data=nn_component.output.data))
    pipeline.compile()
    pipeline.fit()


if __name__ == "__main__":
    parser = argparse.ArgumentParser("PIPELINE DEMO")
    parser.add_argument("-config", type=str,
                        help="config file")
    args = parser.parse_args()
    if args.config is not None:
        main(args.config)
    else:
        main()

pipeline_homo_nn_train_multi.py

import argparse

# torch
import torch as t
from torch import nn
from pipeline import fate_torch_hook

# pipeline
from pipeline.backend.pipeline import PipeLine
from pipeline.component import Reader, DataTransform, HomoNN, Evaluation
from pipeline.component.nn import TrainerParam, DatasetParam
from pipeline.interface import Data
from pipeline.utils.tools import load_job_config

fate_torch_hook(t)


def main(config="../../config.yaml", namespace=""):
    # obtain config
    if isinstance(config, str):
        config = load_job_config(config)
    parties = config.parties
    guest = parties.guest[0]
    host = parties.host[0]
    arbiter = parties.arbiter[0]

    pipeline = PipeLine().set_initiator(role='guest', party_id=guest).set_roles(guest=guest, host=host, arbiter=arbiter)

    train_data_0 = {"name": "vehicle_scale_homo_guest", "namespace": "experiment"}
    train_data_1 = {"name": "vehicle_scale_homo_host", "namespace": "experiment"}

    reader_0 = Reader(name="reader_0")
    reader_0.get_party_instance(role='guest', party_id=guest).component_param(table=train_data_0)
    reader_0.get_party_instance(role='host', party_id=host).component_param(table=train_data_1)

    data_transform_0 = DataTransform(name='data_transform_0')
    data_transform_0.get_party_instance(
        role='guest', party_id=guest).component_param(
        with_label=True, output_format="dense")
    data_transform_0.get_party_instance(
        role='host', party_id=host).component_param(
        with_label=True, output_format="dense")

    model = nn.Sequential(
        nn.Linear(18, 4),
        nn.Softmax(dim=1)  # note: CrossEntropyLoss already applies log-softmax to its input
    )
    loss = nn.CrossEntropyLoss()
    optimizer = t.optim.Adam(model.parameters(), lr=0.01)

    nn_component = HomoNN(name='nn_0',
                          model=model,
                          loss=loss,
                          optimizer=optimizer,
                          trainer=TrainerParam(trainer_name='fedavg_trainer', epochs=50, batch_size=128,
                                               validation_freqs=1),
                          # reshape and set label to long for CrossEntropyLoss
                          dataset=DatasetParam(dataset_name='table', flatten_label=True, label_dtype='long'),
                          torch_seed=100
                          )

    pipeline.add_component(reader_0)
    pipeline.add_component(data_transform_0, data=Data(data=reader_0.output.data))
    pipeline.add_component(nn_component, data=Data(train_data=data_transform_0.output.data))
    pipeline.add_component(Evaluation(name='eval_0', eval_type='multi'), data=Data(data=nn_component.output.data))
    pipeline.compile()
    pipeline.fit()


if __name__ == "__main__":
    parser = argparse.ArgumentParser("PIPELINE DEMO")
    parser.add_argument("-config", type=str,
                        help="config file")
    args = parser.parse_args()
    if args.config is not None:
        main(args.config)
    else:
        main()
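PyTorch's CrossEntropyLoss expects class-index targets as a 1-D tensor of integer (long) type, whereas a table dataset naturally yields one label column per sample. The plain-Python sketch below illustrates what `flatten_label=True` and `label_dtype='long'` are meant to achieve, assuming labels arrive as an N x 1 column of floats; it does not use FATE's dataset class, and the helper name is hypothetical.

```python
from typing import List


def flatten_long_labels(label_column: List[List[float]]) -> List[int]:
    """Turn [[2.0], [0.0], ...] (N x 1 floats) into [2, 0, ...] (N class indices)."""
    flat = []
    for row in label_column:
        if len(row) != 1:
            raise ValueError("expected exactly one label per sample")
        value = row[0]
        if value < 0 or value != int(value):
            raise ValueError(f"label {value} is not a valid class index")
        flat.append(int(value))
    return flat


print(flatten_long_labels([[2.0], [0.0], [3.0], [1.0]]))  # [2, 0, 3, 1]
```

With PyTorch itself, the equivalent on a label tensor would be along the lines of `labels.flatten().long()`.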

pipeline_homo_nn_aggregate_n_epoch.py

import argparse

# torch
import torch as t
from torch import nn
from pipeline import fate_torch_hook

# pipeline
from pipeline.backend.pipeline import PipeLine
from pipeline.component import Reader, DataTransform, HomoNN, Evaluation
from pipeline.component.nn import TrainerParam
from pipeline.interface import Data
from pipeline.utils.tools import load_job_config

fate_torch_hook(t)


def main(config="../../config.yaml", namespace=""):
    # obtain config
    if isinstance(config, str):
        config = load_job_config(config)
    parties = config.parties
    guest = parties.guest[0]
    host = parties.host[0]
    arbiter = parties.arbiter[0]

    pipeline = PipeLine().set_initiator(role='guest', party_id=guest).set_roles(guest=guest, host=host, arbiter=arbiter)

    train_data_0 = {"name": "breast_homo_guest", "namespace": "experiment"}
    train_data_1 = {"name": "breast_homo_host", "namespace": "experiment"}

    reader_0 = Reader(name="reader_0")
    reader_0.get_party_instance(role='guest', party_id=guest).component_param(table=train_data_0)
    reader_0.get_party_instance(role='host', party_id=host).component_param(table=train_data_1)

    data_transform_0 = DataTransform(name='data_transform_0')
    data_transform_0.get_party_instance(
        role='guest', party_id=guest).component_param(
        with_label=True, output_format="dense")
    data_transform_0.get_party_instance(
        role='host', party_id=host).component_param(
        with_label=True, output_format="dense")

    model = nn.Sequential(
        nn.Linear(30, 1),
        nn.Sigmoid()
    )
    loss = nn.BCELoss()
    optimizer = t.optim.Adam(model.parameters(), lr=0.01)

    nn_component = HomoNN(name='nn_0',
                          model=model,
                          loss=loss,
                          optimizer=optimizer,
                          trainer=TrainerParam(trainer_name='fedavg_trainer', epochs=20, batch_size=128,
                                               validation_freqs=1, aggregate_every_n_epoch=5),
                          torch_seed=100
                          )

    pipeline.add_component(reader_0)
    pipeline.add_component(data_transform_0, data=Data(data=reader_0.output.data))
    pipeline.add_component(nn_component, data=Data(train_data=data_transform_0.output.data))
    pipeline.add_component(Evaluation(name='eval_0'), data=Data(data=nn_component.output.data))
    pipeline.compile()
    pipeline.fit()


if __name__ == "__main__":
    parser = argparse.ArgumentParser("PIPELINE DEMO")
    parser.add_argument("-config", type=str,
                        help="config file")
    args = parser.parse_args()
    if args.config is not None:
        main(args.config)
    else:
        main()
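With `epochs=20` and `aggregate_every_n_epoch=5`, each party trains locally for several epochs between synchronizations with the arbiter. A minimal sketch of that schedule, assuming aggregation happens at the end of every n-th epoch counting from 1; the helper is hypothetical and only computes which epochs trigger an aggregation.

```python
from typing import List


def aggregation_epochs(epochs: int, every_n: int) -> List[int]:
    """1-based epoch numbers after which models are sent for aggregation."""
    if every_n <= 0:
        raise ValueError("aggregate_every_n_epoch must be positive")
    return [e for e in range(1, epochs + 1) if e % every_n == 0]


print(aggregation_epochs(20, 5))       # [5, 10, 15, 20]: 4 aggregation rounds
print(len(aggregation_epochs(20, 1)))  # 20: one aggregation per epoch
```

Aggregating less often reduces communication with the arbiter, at the cost of letting local models drift further apart between rounds.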

pipeline_homo_nn_train_binary.py

import argparse

# torch
import torch as t
from torch import nn
from pipeline import fate_torch_hook

# pipeline
from pipeline.backend.pipeline import PipeLine
from pipeline.component import Reader, DataTransform, HomoNN, Evaluation
from pipeline.component.nn import TrainerParam
from pipeline.interface import Data
from pipeline.utils.tools import load_job_config

fate_torch_hook(t)


def main(config="../../config.yaml", namespace=""):
    # obtain config
    if isinstance(config, str):
        config = load_job_config(config)
    parties = config.parties
    guest = parties.guest[0]
    host = parties.host[0]
    arbiter = parties.arbiter[0]

    pipeline = PipeLine().set_initiator(role='guest', party_id=guest).set_roles(guest=guest, host=host, arbiter=arbiter)

    train_data_0 = {"name": "breast_homo_guest", "namespace": "experiment"}
    train_data_1 = {"name": "breast_homo_host", "namespace": "experiment"}

    reader_0 = Reader(name="reader_0")
    reader_0.get_party_instance(role='guest', party_id=guest).component_param(table=train_data_0)
    reader_0.get_party_instance(role='host', party_id=host).component_param(table=train_data_1)

    data_transform_0 = DataTransform(name='data_transform_0')
    data_transform_0.get_party_instance(
        role='guest', party_id=guest).component_param(
        with_label=True, output_format="dense")
    data_transform_0.get_party_instance(
        role='host', party_id=host).component_param(
        with_label=True, output_format="dense")

    model = nn.Sequential(
        nn.Linear(30, 1),
        nn.Sigmoid()
    )
    loss = nn.BCELoss()
    optimizer = t.optim.Adam(model.parameters(), lr=0.01)

    nn_component = HomoNN(name='nn_0',
                          model=model,
                          loss=loss,
                          optimizer=optimizer,
                          trainer=TrainerParam(trainer_name='fedavg_trainer', epochs=20, batch_size=128,
                                               validation_freqs=1),
                          torch_seed=100
                          )

    pipeline.add_component(reader_0)
    pipeline.add_component(data_transform_0, data=Data(data=reader_0.output.data))
    pipeline.add_component(nn_component, data=Data(train_data=data_transform_0.output.data))
    pipeline.add_component(Evaluation(name='eval_0'), data=Data(data=nn_component.output.data))
    pipeline.compile()
    pipeline.fit()


if __name__ == "__main__":
    parser = argparse.ArgumentParser("PIPELINE DEMO")
    parser.add_argument("-config", type=str,
                        help="config file")
    args = parser.parse_args()
    if args.config is not None:
        main(args.config)
    else:
        main()

## Homo Neural Network Configuration Usage Guide.

This section introduces the DSL and conf files for different types of tasks.

#### Example Tasks

1. Binary Train Task:

dsl: homo_nn_train_binary_dsl.json

runtime_config: homo_nn_train_binary_conf.json

2. Multi Train Task:

dsl: homo_nn_train_multi_dsl.json

runtime_config: homo_nn_train_multi_conf.json

3. Binary Task, aggregating every N epochs:

dsl: homo_nn_aggregate_n_epoch_dsl.json

runtime_config: homo_nn_aggregate_n_epoch_conf.json

4. Regression Task:

dsl: homo_nn_train_regression_dsl.json

runtime_config: homo_nn_train_regression_conf.json

Users can use the following command to run a task:

flow job submit -c ${runtime_config} -d ${dsl}

After a training task has finished successfully, you can use the obtained model to perform prediction. To do so, add the corresponding model id and model version to the configuration [file](./hetero-lr-normal-predict-conf.json).

homo_nn_testsuite.json

{

"data": [

{

"file": "examples/data/breast_homo_guest.csv",

"head": 1,

"partition": 16,

"table_name": "breast_homo_guest",

"namespace": "experiment",

"role": "guest_0"

},

{

"file": "examples/data/breast_homo_host.csv",

"head": 1,

"partition": 16,

"table_name": "breast_homo_host",

"namespace": "experiment",

"role": "host_0"

},

{

"file": "examples/data/vehicle_scale_homo_guest.csv",

"head": 1,

"partition": 16,

"table_name": "vehicle_scale_homo_guest",

"namespace": "experiment",

"role": "guest_0"

},

{

"file": "examples/data/vehicle_scale_homo_host.csv",

"head": 1,

"partition": 16,

"table_name": "vehicle_scale_homo_host",

"namespace": "experiment",

"role": "host_0"

},

{

"file": "examples/data/student_homo_guest.csv",

"head": 1,

"partition": 16,

"table_name": "student_homo_guest",

"namespace": "experiment",

"role": "guest_0"

},

{

"file": "examples/data/student_homo_host.csv",

"head": 1,

"partition": 16,

"table_name": "student_homo_host",

"namespace": "experiment",

"role": "host_0"

}

],

"tasks": {

"homo_nn_train_regression": {

"conf": "homo_nn_train_regression_conf.json",

"dsl": "homo_nn_train_regression_dsl.json"

},

"homo_nn_train_binary": {

"conf": "homo_nn_train_binary_conf.json",

"dsl": "homo_nn_train_binary_dsl.json"

},

"homo_nn_train_multi": {

"conf": "homo_nn_train_multi_conf.json",

"dsl": "homo_nn_train_multi_dsl.json"

},

"homo_nn_aggregate_n_epoch": {

"conf": "homo_nn_aggregate_n_epoch_conf.json",

"dsl": "homo_nn_aggregate_n_epoch_dsl.json"

}

}

}

homo_nn_train_regression_conf.json

{

"dsl_version": 2,

"initiator": {

"role": "guest",

"party_id": 9999

},

"role": {

"guest": [

9999

],

"host": [

10000

],

"arbiter": [

10000

]

},

"job_parameters": {

"common": {

"job_type": "train"

}

},

"component_parameters": {

"role": {

"guest": {

"0": {

"reader_0": {

"table": {

"name": "student_homo_guest",

"namespace": "experiment"

}

},

"data_transform_0": {

"with_label": true,

"output_format": "dense"

}

}

},

"host": {

"0": {

"reader_0": {

"table": {

"name": "student_homo_host",

"namespace": "experiment"

}

},

"data_transform_0": {

"with_label": true,

"output_format": "dense"

}

}

}

},

"common": {

"nn_0": {

"loss": {

"size_average": null,

"reduce": null,

"reduction": "mean",

"loss_fn": "MSELoss"

},

"optimizer": {

"lr": 0.01,

"betas": [

0.9,

0.999

],

"eps": 1e-08,

"weight_decay": 0,

"amsgrad": false,

"optimizer": "Adam",

"config_type": "pytorch"

},

"trainer": {

"trainer_name": "fedavg_trainer",

"param": {

"epochs": 20,

"batch_size": 128,

"validation_freqs": 1

}

},

"torch_seed": 100,

"nn_define": {

"0-0": {

"bias": true,

"device": null,

"dtype": null,

"in_features": 13,

"out_features": 1,

"layer": "Linear",

"initializer": {}

}

}

},

"eval_0": {

"eval_type": "regression"

}

}

}

}

homo_nn_train_binary_conf.json

{

"dsl_version": 2,

"initiator": {

"role": "guest",

"party_id": 9999

},

"role": {

"guest": [

9999

],

"host": [

10000

],

"arbiter": [

10000

]

},

"job_parameters": {

"common": {

"job_type": "train"

}

},

"component_parameters": {

"role": {

"host": {

"0": {

"reader_0": {

"table": {

"name": "breast_homo_host",

"namespace": "experiment"

}

},

"data_transform_0": {

"with_label": true,

"output_format": "dense"

}

}

},

"guest": {

"0": {

"reader_0": {

"table": {

"name": "breast_homo_guest",

"namespace": "experiment"

}

},

"data_transform_0": {

"with_label": true,

"output_format": "dense"

}

}

}

},

"common": {

"nn_0": {

"loss": {

"weight": null,

"size_average": null,

"reduce": null,

"reduction": "mean",

"loss_fn": "BCELoss"

},

"optimizer": {

"lr": 0.01,

"betas": [

0.9,

0.999

],

"eps": 1e-08,

"weight_decay": 0,

"amsgrad": false,

"optimizer": "Adam",

"config_type": "pytorch"

},

"trainer": {

"trainer_name": "fedavg_trainer",

"param": {

"epochs": 20,

"batch_size": 128,

"validation_freqs": 1

}

},

"torch_seed": 100,

"nn_define": {

"0-0": {

"bias": true,

"device": null,

"dtype": null,

"in_features": 30,

"out_features": 1,

"layer": "Linear",

"initializer": {}

},

"1-1": {

"layer": "Sigmoid",

"initializer": {}

}

}

}

}

}

}

homo_nn_aggregate_n_epoch_conf.json

{

"dsl_version": 2,

"initiator": {

"role": "guest",

"party_id": 9999

},

"role": {

"guest": [

9999

],

"host": [

10000

],

"arbiter": [

10000

]

},

"job_parameters": {

"common": {

"job_type": "train"

}

},

"component_parameters": {

"role": {

"guest": {

"0": {

"data_transform_0": {

"with_label": true,

"output_format": "dense"

},

"reader_0": {

"table": {

"name": "breast_homo_guest",

"namespace": "experiment"

}

}

}

},

"host": {

"0": {

"data_transform_0": {

"with_label": true,

"output_format": "dense"

},

"reader_0": {

"table": {

"name": "breast_homo_host",

"namespace": "experiment"

}

}

}

}

},

"common": {

"nn_0": {

"loss": {

"weight": null,

"size_average": null,

"reduce": null,

"reduction": "mean",

"loss_fn": "BCELoss"

},

"optimizer": {

"lr": 0.01,

"betas": [

0.9,

0.999

],

"eps": 1e-08,

"weight_decay": 0,

"amsgrad": false,

"optimizer": "Adam",

"config_type": "pytorch"

},

"trainer": {

"trainer_name": "fedavg_trainer",

"param": {

"epochs": 20,

"batch_size": 128,

"validation_freqs": 1,

"aggregate_every_n_epoch": 5

}

},

"torch_seed": 100,

"nn_define": {

"0-0": {

"bias": true,

"device": null,

"dtype": null,

"in_features": 30,

"out_features": 1,

"layer": "Linear",

"initializer": {}

},

"1-1": {

"layer": "Sigmoid",

"initializer": {}

}

}

}

}

}

}

homo_nn_train_binary_dsl.json

{

"components": {

"reader_0": {

"module": "Reader",

"output": {

"data": [

"data"

]

},

"provider": "fate_flow"

},

"data_transform_0": {

"module": "DataTransform",

"input": {

"data": {

"data": [

"reader_0.data"

]

}

},

"output": {

"data": [

"data"

],

"model": [

"model"

]

},

"provider": "fate"

},

"nn_0": {

"module": "HomoNN",

"input": {

"data": {

"train_data": [

"data_transform_0.data"

]

}

},

"output": {

"data": [

"data"

],

"model": [

"model"

]

},

"provider": "fate"

},

"eval_0": {

"module": "Evaluation",

"input": {

"data": {

"data": [

"nn_0.data"

]

}

},

"output": {

"data": [

"data"

]

},

"provider": "fate"

}

}

}

homo_nn_train_multi_dsl.json

{

"components": {

"reader_0": {

"module": "Reader",

"output": {

"data": [

"data"

]

},

"provider": "fate_flow"

},

"data_transform_0": {

"module": "DataTransform",

"input": {

"data": {

"data": [

"reader_0.data"

]

}

},

"output": {

"data": [

"data"

],

"model": [

"model"

]

},

"provider": "fate"

},

"nn_0": {

"module": "HomoNN",

"input": {

"data": {

"train_data": [

"data_transform_0.data"

]

}

},

"output": {

"data": [

"data"

],

"model": [

"model"

]

},

"provider": "fate"

},

"eval_0": {

"module": "Evaluation",

"input": {

"data": {

"data": [

"nn_0.data"

]

}

},

"output": {

"data": [

"data"

]

},

"provider": "fate"

}

}

}

homo_nn_train_regression_dsl.json

{

"components": {

"reader_0": {

"module": "Reader",

"output": {

"data": [

"data"

]

},

"provider": "fate_flow"

},

"data_transform_0": {

"module": "DataTransform",

"input": {

"data": {

"data": [

"reader_0.data"

]

}

},

"output": {

"data": [

"data"

],

"model": [

"model"

]

},

"provider": "fate"

},

"nn_0": {

"module": "HomoNN",

"input": {

"data": {

"train_data": [

"data_transform_0.data"

]

}

},

"output": {

"data": [

"data"

],

"model": [

"model"

]

},

"provider": "fate"

},

"eval_0": {

"module": "Evaluation",

"input": {

"data": {

"data": [

"nn_0.data"

]

}

},

"output": {

"data": [

"data"

]

},

"provider": "fate"

}

}

}

homo_nn_aggregate_n_epoch_dsl.json

{

"components": {

"reader_0": {

"module": "Reader",

"output": {

"data": [

"data"

]

},

"provider": "fate_flow"

},

"data_transform_0": {

"module": "DataTransform",

"input": {

"data": {

"data": [

"reader_0.data"

]

}

},

"output": {

"data": [

"data"

],

"model": [

"model"

]

},

"provider": "fate"

},

"nn_0": {

"module": "HomoNN",

"input": {

"data": {

"train_data": [

"data_transform_0.data"

]

}

},

"output": {

"data": [

"data"

],

"model": [

"model"

]

},

"provider": "fate"

},

"eval_0": {

"module": "Evaluation",

"input": {

"data": {

"data": [

"nn_0.data"

]

}

},

"output": {

"data": [

"data"

]

},

"provider": "fate"

}

}

}

homo_nn_train_multi_conf.json

{

"dsl_version": 2,

"initiator": {

"role": "guest",

"party_id": 9999

},

"role": {

"guest": [

9999

],

"host": [

10000

],

"arbiter": [

10000

]

},

"job_parameters": {

"common": {

"job_type": "train"

}

},

"component_parameters": {

"role": {

"guest": {

"0": {

"reader_0": {

"table": {

"name": "vehicle_scale_homo_guest",

"namespace": "experiment"

}

},

"data_transform_0": {

"with_label": true,

"output_format": "dense"

}

}

},

"host": {

"0": {

"reader_0": {

"table": {

"name": "vehicle_scale_homo_host",

"namespace": "experiment"

}

},

"data_transform_0": {

"with_label": true,

"output_format": "dense"

}

}

}

},

"common": {

"nn_0": {

"loss": {

"weight": null,

"size_average": null,

"ignore_index": -100,

"reduce": null,

"reduction": "mean",

"label_smoothing": 0.0,

"loss_fn": "CrossEntropyLoss"

},

"optimizer": {

"lr": 0.01,

"betas": [

0.9,

0.999

],

"eps": 1e-08,

"weight_decay": 0,

"amsgrad": false,

"optimizer": "Adam",

"config_type": "pytorch"

},

"trainer": {

"trainer_name": "fedavg_trainer",

"param": {

"epochs": 50,

"batch_size": 128,

"validation_freqs": 1

}

},

"dataset": {

"dataset_name": "table",

"param": {

"flatten_label": true,

"label_dtype": "long"

}

},

"torch_seed": 100,

"nn_define": {

"0-0": {

"bias": true,

"device": null,

"dtype": null,

"in_features": 18,

"out_features": 4,

"layer": "Linear",

"initializer": {}

},

"1-1": {

"dim": 1,

"layer": "Softmax",

"initializer": {}

}

}

},

"eval_0": {

"eval_type": "multi"

}

}

}

}